Docker Architecture:

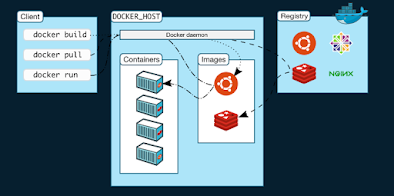

The architecture diagram below is taken from the official Docker site.

In this post we will discuss the Docker architecture. Docker follows a client-server model.

Below are the components of the Docker architecture.

- Docker Client

- Docker Daemon

- Docker Registry

Let's go deeper into each of these concepts.

Docker Client:

The Docker client provides the user interface for interacting with the Docker daemon (somewhat like the way a shell lets us interact with the kernel).

This means that to pull or push images, or to create containers, we issue docker commands from the Docker client, and the client passes them on to the Docker daemon. The daemon then performs the actual tasks: pulling and pushing images, creating containers, and so on.

When we install Docker, both the Docker client and the Docker daemon are installed by default. We can see both of them in the output of the docker version command.

The Docker client and the Docker daemon communicate using REST API calls, over a Unix socket or a network interface.
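To make the REST API idea concrete, here is a minimal Python sketch (standard library only) that sends an HTTP GET straight to the daemon over its Unix socket. It is only an illustration. It assumes the default Linux socket path /var/run/docker.sock and a user allowed to access it (root or a member of the docker group). The names UnixHTTPConnection and docker_get are our own and are not part of any Docker library.

```python
import http.client
import json
import socket

# Assumed default daemon socket on Linux; other setups may use another path.
DOCKER_SOCKET = "/var/run/docker.sock"


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that speaks HTTP over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 10.0):
        # "localhost" only fills the HTTP Host header; no DNS lookup happens.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def docker_get(path: str, socket_path: str = DOCKER_SOCKET):
    """Send `GET <path>` to the daemon and return the decoded JSON reply."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        body = resp.read()
        if resp.status != 200:
            raise RuntimeError(f"GET {path} failed: {resp.status} {body!r}")
        return json.loads(body)
    finally:
        conn.close()
```

For example, `docker_get("/version")` returns the daemon's version details. These are roughly the data the docker version command shows in its Server section.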

Docker Daemon (Docker Engine) on the Docker Host:

The Docker daemon (dockerd) listens for Docker API requests and manages Docker objects such as images, containers, networks, and volumes. A daemon can also communicate with other daemons to manage Docker services.
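Each kind of object the daemon manages has its own listing endpoint in the Docker Engine API. The sketch below maps the four object types to those endpoints and counts what the daemon reports. It is illustrative only. The `get` argument is an assumption: any function that sends a GET request to the daemon and returns decoded JSON, for example an HTTP client pointed at the daemon's Unix socket. The helper names are hypothetical.

```python
from typing import Any, Callable, Dict, List

# Engine API endpoints that list each kind of object the daemon manages.
LIST_ENDPOINTS: Dict[str, str] = {
    "images": "/images/json",
    "containers": "/containers/json?all=1",  # all=1 also includes stopped containers
    "networks": "/networks",
    "volumes": "/volumes",
}


def list_objects(kind: str, get: Callable[[str], Any]) -> List[Any]:
    """Return the objects of one kind, using `get` to perform the API call."""
    reply = get(LIST_ENDPOINTS[kind])
    # GET /volumes wraps its list as {"Volumes": [...], "Warnings": [...]};
    # the other three endpoints return a plain JSON array.
    if kind == "volumes":
        return reply.get("Volumes") or []
    return reply


def count_objects(get: Callable[[str], Any]) -> Dict[str, int]:
    """Count the images, containers, networks and volumes known to the daemon."""
    return {kind: len(list_objects(kind, get)) for kind in LIST_ENDPOINTS}
```

Passing `get` in as a parameter keeps the sketch independent of how the request actually reaches the daemon.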

Docker Registry:

A Docker registry stores Docker images. Docker Hub is a public registry that anyone can use, and Docker is configured to look for images on Docker Hub by default. We can also run our own private registry (for example, Harbor).
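Because Docker Hub is the default, a short name like tomcat is really shorthand for docker.io/library/tomcat:latest. The Python sketch below shows a simplified version of that expansion. Docker's real reference parser also validates names and covers more edge cases. The function name and the harbor.example.com host in the comments are hypothetical.

```python
def normalize_image_ref(ref: str) -> str:
    """Expand a short image name to registry/path:tag (simplified rules)."""
    name, digest = ref, ""
    if "@" in ref:
        name, digest = ref.split("@", 1)  # pinned by content digest
    tag = ""
    # A tag is a ":" in the last path component (a ":" earlier is a registry port).
    if ":" in name.rsplit("/", 1)[-1]:
        name, tag = name.rsplit(":", 1)
    first, sep, rest = name.partition("/")
    # The first component names a registry only if it looks like a host.
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest  # e.g. harbor.example.com/myproj/app
    else:
        registry, path = "docker.io", name  # default: Docker Hub
    # Official Docker Hub images live under the "library/" namespace.
    if registry == "docker.io" and "/" not in path:
        path = "library/" + path
    if digest:
        return f"{registry}/{path}@{digest}"
    return f"{registry}/{path}:{tag or 'latest'}"
```

For instance, `normalize_image_ref("tomcat")` gives `docker.io/library/tomcat:latest`. An image in a private Harbor registry keeps its own host name.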

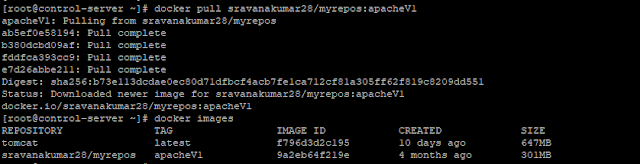

When we issue the docker pull command from the Docker client, the client first contacts the Docker daemon. The daemon then downloads the image from the Docker registry (Docker Hub) onto the Docker host.

The Docker host is simply the VM, machine, or server on which the Docker daemon (Docker Engine) is running.
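On the API side, a pull is roughly a `POST /images/create?fromImage=...&tag=...` request to the daemon. The daemon answers with a stream of JSON progress messages while it downloads from the registry. This Python sketch builds that request path and reads such a stream. It is an illustration, not the CLI's exact behaviour: the real CLI also sends registry credentials and renders progress bars. The function names are hypothetical.

```python
import json
from urllib.parse import urlencode
from typing import List


def pull_request_path(image: str, tag: str = "latest") -> str:
    """Engine API path roughly equivalent to `docker pull image:tag`."""
    return "/images/create?" + urlencode({"fromImage": image, "tag": tag})


def parse_pull_progress(raw: bytes) -> List[str]:
    """Collect the status messages from the daemon's streamed pull reply."""
    statuses = []
    for line in raw.splitlines():
        if not line.strip():
            continue
        msg = json.loads(line)
        # Errors that occur after streaming starts arrive in-band as "error".
        if "error" in msg:
            raise RuntimeError(msg["error"])
        statuses.append(msg.get("status", ""))
    return statuses
```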

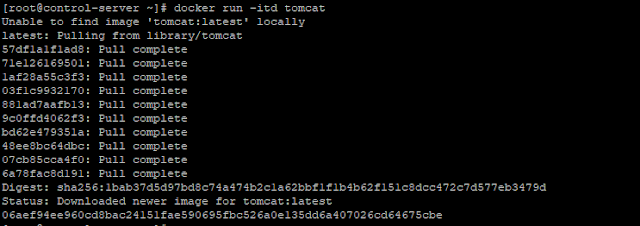

In the same way, when we issue the docker run command, the client again talks to the daemon. The daemon looks for the image in its local image store. If the image is there, it runs a container from it; if the image is not present locally, it is first pulled from the configured registry.
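The run flow described above can be modelled as a short sequence of Engine API calls. This Python sketch follows the order used in this post: check local images, pull if missing, create, then start. It is a simplified model. The real CLI instead tries to create the container first and pulls only when the daemon reports that the image is missing. The `api` transport callable and the function name are hypothetical, and the sketch assumes a short Docker Hub name such as tomcat.

```python
from typing import Any, Callable, Optional

# Hypothetical transport: api(method, path, json_body) sends one Engine API
# request to the daemon and returns the decoded JSON reply (None if empty).
Api = Callable[[str, str, Optional[dict]], Any]


def run_container(api: Api, image: str) -> str:
    """Simplified model of the steps behind `docker run -d <image>`."""
    repo, _, tag = image.partition(":")  # assumes no registry host/port
    tag = tag or "latest"
    ref = f"{repo}:{tag}"
    # 1. Ask the daemon which images it already has locally.
    local_images = api("GET", "/images/json", None)
    present = any(ref in (img.get("RepoTags") or []) for img in local_images)
    # 2. Pull from the registry only when the image is missing.
    if not present:
        api("POST", f"/images/create?fromImage={repo}&tag={tag}", None)
    # 3. Create a container from the image, then start it.
    created = api("POST", "/containers/create", {"Image": ref})
    api("POST", f"/containers/{created['Id']}/start", None)
    return created["Id"]
```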

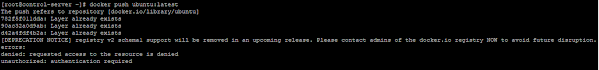

Likewise, when we use the docker push command, the daemon pushes our image to the registry named in the image reference (Docker Hub if no registry is given).
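To push to a private registry such as Harbor, the image is usually tagged first with a name that starts with the registry host. The Python sketch below builds those two CLI calls. The registry host and project are placeholders (harbor.example.com and myproj are hypothetical), and the sketch assumes a short local image name. A `docker login` to that registry is normally needed before the push succeeds.

```python
from typing import List


def push_commands(image: str, registry: str, project: str) -> List[List[str]]:
    """Build the CLI calls that push a local image to a private registry."""
    name, _, tag = image.partition(":")  # assumes a short name like "tomcat:9.0"
    # The registry host at the front of the reference decides where
    # `docker push` sends the image; without one, Docker Hub is used.
    target = f"{registry}/{project}/{name}:{tag or 'latest'}"
    return [
        ["docker", "tag", image, target],  # add a registry-qualified name
        ["docker", "push", target],        # upload it to that registry
    ]
```

Each inner list can be passed directly to `subprocess.run`.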

Now, let's get into the lab exercise.

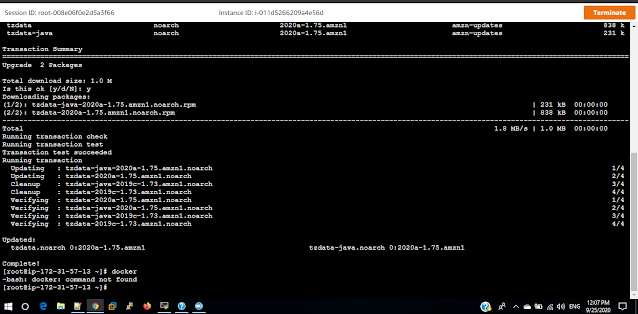

The Docker installation steps were discussed in the previous post; please follow those steps first.

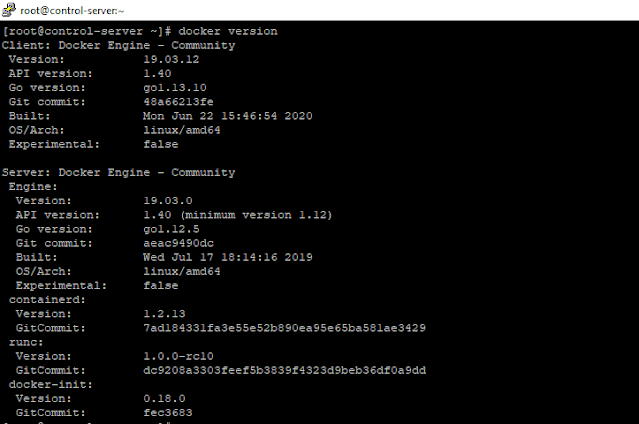

Once installation is done, check the version with the docker version command.

In the output we can see two sections, called Client and Server.

Client refers to the Docker client and Server refers to the Docker daemon.

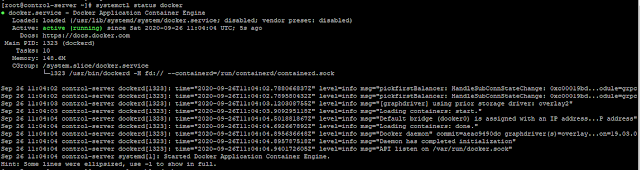

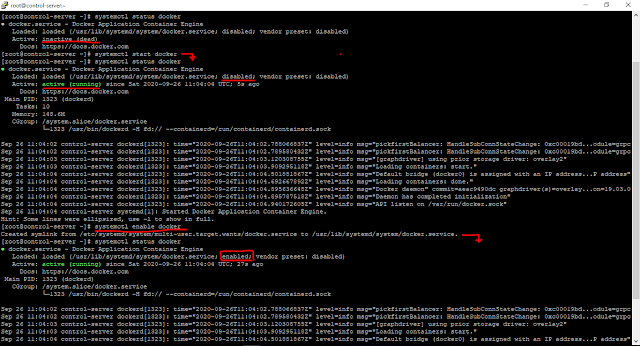
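The same Client/Server split can be read from a script. The docker version command accepts `--format '{{json .}}'`, which prints both sections as a single JSON object. This Python sketch runs that command and pulls out the two version strings. The function names are our own, and the sketch treats a missing Server section as "daemon not reachable".

```python
import json
import subprocess
from typing import Optional, Tuple


def docker_version_json() -> dict:
    """Run `docker version` and return its output as a dict."""
    out = subprocess.run(
        ["docker", "version", "--format", "{{json .}}"],
        capture_output=True, text=True, check=True,  # raises if the CLI fails
    ).stdout
    return json.loads(out)


def client_server_versions(info: dict) -> Tuple[Optional[str], Optional[str]]:
    """Return (client version, server version); None where a section is absent."""
    client = (info.get("Client") or {}).get("Version")
    server = (info.get("Server") or {}).get("Version")
    return client, server
```

On a machine with Docker running, `client_server_versions(docker_version_json())` returns the two version strings shown under Client and Server.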

Now we will check the status of Docker on the box where Docker is installed.

systemctl status docker is the command to check the Docker status.

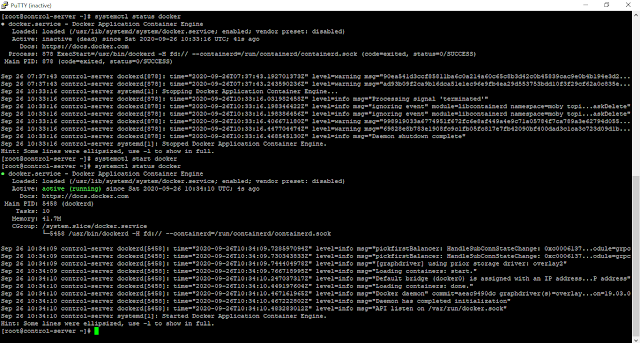

If it is not running, issue the command below to start the Docker engine.

systemctl start docker starts the Docker daemon/Docker Engine.

systemctl stop docker stops the Docker daemon/Docker Engine.

systemctl enable docker configures the Docker daemon service to start automatically whenever the system boots.

The output will look like this:

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

When you execute this command, the service gets enabled, so the Docker daemon will be up and running again after the Linux box reboots.

If it is not enabled, the Docker daemon will not start again after the system reboots or restarts.

So it is recommended to enable Docker after starting it.
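The check, start and enable steps above can be combined into one small Python helper. It relies on `systemctl is-active --quiet` and `systemctl is-enabled --quiet`, which signal the answer through their exit code. This is a sketch that assumes a systemd-based Linux box and root privileges; the helper names are our own. On the command line, `systemctl enable --now docker` does the enable and start in one step.

```python
import subprocess
from typing import Callable, List

Runner = Callable[[List[str]], subprocess.CompletedProcess]


def run_cmd(cmd: List[str]) -> subprocess.CompletedProcess:
    """Run a command and capture its output without raising on failure."""
    return subprocess.run(cmd, capture_output=True, text=True)


def ensure_docker_service(run: Runner = run_cmd) -> List[str]:
    """Start docker.service and enable it at boot if needed; return actions taken."""
    actions = []
    # `systemctl is-active --quiet` exits with 0 only if the unit is running.
    if run(["systemctl", "is-active", "--quiet", "docker"]).returncode != 0:
        if run(["systemctl", "start", "docker"]).returncode != 0:
            raise RuntimeError("systemctl start docker failed (are you root?)")
        actions.append("start")
    # `systemctl is-enabled --quiet` exits with 0 when the unit is enabled.
    if run(["systemctl", "is-enabled", "--quiet", "docker"]).returncode != 0:
        if run(["systemctl", "enable", "docker"]).returncode != 0:
            raise RuntimeError("systemctl enable docker failed (are you root?)")
        actions.append("enable")
    return actions
```

The `run` parameter lets the logic be tried with a fake runner on machines without systemd.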

Now let's understand how the Docker commands work.

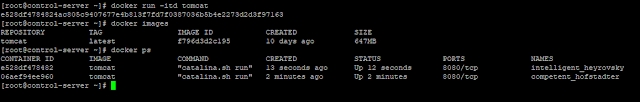

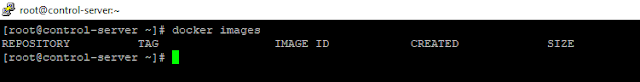

docker images -- lists the images available locally on the box.

On my Linux box there are no images locally yet. When I run a container, Docker first checks the local images and, because the image is not there, downloads it from Docker Hub.

docker run -itd tomcat -- docker run is the client command and tomcat is the image name. -itd combines three options: -i (interactive, keep STDIN open), -t (allocate a pseudo-terminal), and -d (detached, run the container in the background). The command downloads the tomcat image into the local images if it is not already there, and then runs a container from that image.
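To run the same command from a script, the combined `-itd` option can be built from its three parts. This Python sketch assembles the argument list and runs it in detached mode. With -d, docker run prints the new container's ID, and the sketch reads that ID from the last line of stdout. The function names are hypothetical, and `start_detached` needs a working Docker installation.

```python
import subprocess
from typing import List


def docker_run_argv(image: str, interactive: bool = True, tty: bool = True,
                    detach: bool = True) -> List[str]:
    """Build the argument list for `docker run` with the -i/-t/-d options."""
    flags = ""
    if interactive:
        flags += "i"  # -i: keep STDIN open
    if tty:
        flags += "t"  # -t: allocate a pseudo-TTY
    if detach:
        flags += "d"  # -d: run in the background and print the container ID
    argv = ["docker", "run"]
    if flags:
        argv.append("-" + flags)  # single-letter options can be combined
    argv.append(image)
    return argv


def start_detached(image: str) -> str:
    """Run the container detached and return its ID."""
    result = subprocess.run(docker_run_argv(image), capture_output=True,
                            text=True, check=True)
    # With -d, docker run prints the container ID; take the last line to be safe.
    return result.stdout.strip().splitlines()[-1]
```

`docker_run_argv("tomcat")` reproduces the `docker run -itd tomcat` command used above.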