Tech Capsule

Friday, July 21, 2023

CKA-EXAM-Reference

Wednesday, January 20, 2021

Script to search a word/Parameter from file and append.

In this post, I write a shell script that searches a file for a word or parameter and appends a value to the file if that word or parameter is not already there.

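#!/bin/bash

# Test grep's exit status directly; -q suppresses output, -i ignores case.

if grep -qi "Pasupula" /tmp/Sravan/info.txt

then

echo "The search content is already present in the file"

else

# Append the line only when the word was not found.

echo "Sravanakumar_Pasupula from bangalore" >> /tmp/Sravan/info.txt

fi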
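The same idea can be wrapped in a reusable function. This is only a minimal sketch: the function name append_if_missing and its argument order are assumptions made for illustration, not part of the original script.

```shell
#!/bin/bash
# Hypothetical helper: append LINE to FILE unless PATTERN is already present.
# Usage: append_if_missing <pattern> <line> <file>
append_if_missing() {
    local pattern="$1" line="$2" file="$3"
    # -q: no output, -i: ignore case, -F: treat the pattern as a fixed string.
    # Errors (for example a file that does not exist yet) count as "not found".
    if grep -qiF -- "$pattern" "$file" 2>/dev/null; then
        echo "Pattern already present in $file"
    else
        printf '%s\n' "$line" >> "$file"
    fi
}
```

For example, append_if_missing "Pasupula" "Sravanakumar_Pasupula from bangalore" /tmp/Sravan/info.txt behaves like the script above, and calling it a second time leaves the file unchanged.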

Saturday, November 28, 2020

Docker - Container Lifecycle

In this post, we will explore in detail the different stages a Docker container goes through. From creating a container to destroying it, there are several stages; together these stages are called the Docker container lifecycle.

The following illustration shows the entire lifecycle of a Docker container.

Figure: Docker Container Lifecycle

- When you execute docker create with a specific name and image, a container is created with that name, but none of its processes are started (Created stage).

- The docker run command creates a new container from the image and starts it; the container goes to the Running stage and its processes run.

- When we stop a running container, it goes to the Stopped stage and its processes are stopped.

- When we start a stopped container, it goes back to the Running stage and its processes run again.

- When we pause a running container, it goes to the Paused stage. In this stage the container's processes are suspended, not running.

- When we unpause a paused container, it goes back to the Running stage and its processes resume.

- When we remove a stopped container, it goes to the Deleted stage and the container is removed.
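The transitions listed above can be written down as a small lookup. This is a sketch of the lifecycle as this post describes it, not something Docker provides: the function name next_state and the state name "none" (no container yet) are assumptions, and Docker itself may accept a few transitions that are not listed here.

```shell
#!/bin/bash
# Hypothetical model of the transitions listed above; not part of Docker itself.
# Usage: next_state <current state> <docker subcommand>
next_state() {
    case "$1:$2" in
        none:create)            echo created ;;
        none:run)               echo running ;;
        created:start)          echo running ;;
        running:stop)           echo stopped ;;
        stopped:start)          echo running ;;
        running:pause)          echo paused ;;
        paused:unpause)         echo running ;;
        created:rm|stopped:rm)  echo deleted ;;
        *) echo "invalid: '$2' from state '$1'" >&2; return 1 ;;
    esac
}
```

For example, next_state running pause prints paused, while next_state paused start is rejected because a paused container has to be unpaused rather than started.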

Let's work through these stages in practice to get a clear understanding.

1. Create Container:

The docker create command creates a new Docker container from the specified image without starting it.

syntax: docker create --name <container name> <image name>

example: docker create --name cont-1 ubuntu

2. Run Container:

The docker run command creates a new container from the image and starts it; it does the work of both "docker create" and "docker start". If a container named cont-1 already exists, remove it first or use a different name.

syntax: docker run -itd --name <container name> <image name>

example: docker run -itd --name cont-1 myubuntu:v1

3. Stop Container:

The docker stop command is used to stop a container that is in the Running stage.

syntax: docker stop <container name>

example: docker stop cont-1

4. Start Container:

The docker start command is used to start a container that is in the Stopped stage.

syntax: docker start <container name>

example: docker start cont-1

5. Pause Container:

The docker pause command is used to pause (suspend) a container that is in the Running stage.

syntax: docker pause <container name>

example: docker pause cont-1

6. Unpause Container:

The docker unpause command is used to resume a container that is in the Paused stage.

syntax: docker unpause <container name>

example: docker unpause cont-1

7. Remove Container:

The docker rm command is used to delete a container that is in the Stopped or Created stage.

syntax: docker rm <container name>

example: docker rm cont-1

The docker rm -f command is used to force the deletion of a container that is in the Running stage.

syntax: docker rm -f <container name>

example: docker rm -f cont-1
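To watch the stages change, the commands above can be chained and the state checked after each one with docker inspect. The following is a rough sketch rather than a definitive script: the function names show_state and lifecycle_demo and the DOCKER override variable are assumptions introduced here, and -it is added to docker create so that the ubuntu shell keeps running after docker start.

```shell
#!/bin/bash
# DOCKER can be overridden (e.g. DOCKER=echo) to print the commands
# instead of running them; this variable is an assumption of this sketch.
DOCKER="${DOCKER:-docker}"

# Print the current state of a container as reported by Docker.
show_state() {
    $DOCKER inspect --format '{{.State.Status}}' "$1"
}

# Walk one container through the stages described in this post.
# Usage: lifecycle_demo [container name] [image name]
lifecycle_demo() {
    local name="${1:-cont-1}" image="${2:-ubuntu}" step
    $DOCKER create -it --name "$name" "$image" || return 1
    show_state "$name"                 # created
    for step in start pause unpause stop; do
        $DOCKER "$step" "$name" || return 1
        show_state "$name"             # running, paused, running, exited
    done
    $DOCKER rm "$name"                 # container is deleted
}
```

Calling lifecycle_demo cont-1 ubuntu on a host with Docker installed runs the whole sequence. Note that Docker reports a stopped container as "exited".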

Tuesday, October 27, 2020

Ansible Gathering Facts

- Default Facts

- Custom Facts

Monday, October 26, 2020

AWS Assignment


Objective

At the end of this assignment you will have created a web site using the following Amazon Web Services: EC2, EBS, ELB and S3.

Stage 1: Building the EC2 web server.

- the instance should be of type t2.micro.

- the instance should reside within region ap-south-1 (Mumbai) within availability zone ap-south-1a.

- the instance should use a 1 GiB attached EBS volume that contains a valid partition table with one partition. The partition should contain a valid file system.

- the file system residing on the EBS volume should be mounted automatically upon reboot of the EC2 instance.

- the instance should serve web pages via an appropriate service such as Apache or IIS. This service should start automatically upon boot.

- the instance should serve a web page "index.html" containing well-formed HTML that displays the text "Hello AWS World" and the screenshots created below in Stage 3 (they will be hosted separately). The HTML file should reside on the previously created EBS volume and be served as the default document from the web server root.

- the instance should use Security Groups effectively to allow administration and serve HTTP requests only.

Stage 2: Building and configuring the Elastic Load Balancer:

Create an Elastic Load Balancer (ELB) with the following specification:

- the ELB should be created in the Singapore region.

- the ELB should accept HTTP on port 80.

- the Healthy Threshold for the ELB should be set to 2.

- the ELB should deliver traffic to the EC2 instance created in Stage 1.

Stage 3: Configuring S3

Create a Simple Storage Service (S3) bucket with the following specification:

- bucket should be created in the ap-south-1 region.

- bucket should be publicly readable.

Place screenshots in the S3 bucket, in png format, clearly showing the following:

- The mounted EBS volume, e.g. using Windows Explorer or a command run from the console on a Linux host. This screenshot should be named screen-shot1.png.

- The index.html file residing within EBS, e.g. using Windows Explorer, or in Linux go to the mounted volume directory and run "pwd; ls -l". This screenshot should be named screen-shot2.png.

- The web server configured to serve index.html from the EBS volume as the default document, e.g. the relevant section of the Apache configuration file or IIS Manager. This screenshot should be named screen-shot3.png.

Remember to use the S3 URLs in the index.html file hosted on EC2 (see Stage 1).

DELIVERABLES

Please provide the following information to the communicated email id:

- the public DNS entry/ Public IP for the EC2 instance

- the public URL to the web page via the ELB

Solution

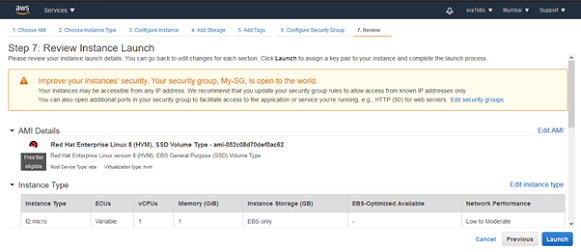

Stage 1: Building the EC2 web server:

Create the EC2 instance in the Mumbai region (ap-south-1).

Select t2.micro as the instance type, as per the assignment.

As per the assignment, choose ap-south-1a as the availability zone.

Add tags according to your naming convention. In my case I added Name as "Apache instance".

As per the assignment, the security group should allow only HTTP traffic, but I allowed both HTTP and HTTPS :)

Review and launch.

Log in to the created EC2 instance as the root user.

To partition the disk as per the assignment, follow the steps below.

yum install lvm2*

lsblk

fdisk -l /dev/xvdb

pvcreate /dev/xvdb1

mkfs.xfs /dev/myvg/myl

mkdir /appsdata

Add an entry for the new file system to /etc/fstab so that it is mounted automatically after the instance reboots.
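The exact /etc/fstab entry is not reproduced in this post. As a minimal sketch of what such a line might look like, the helper below prints one; the function name fstab_entry and the options defaults,nofail 0 2 are assumptions, while the device and mount point are taken from the commands above as written.

```shell
#!/bin/bash
# Hypothetical helper: print an /etc/fstab line for a device and mount point.
# Fields: device, mount point, file system type, options, dump, fsck order.
fstab_entry() {
    local device="$1" mount_point="$2" fstype="${3:-xfs}"
    # "nofail" lets the instance boot even if the volume is not attached.
    printf '%s %s %s defaults,nofail 0 2\n' "$device" "$mount_point" "$fstype"
}

# Device and mount point as they appear in the commands above.
fstab_entry /dev/myvg/myl /appsdata xfs
```

As root, the printed line can be appended to /etc/fstab, and running mount -a afterwards is a quick way to check that the entry mounts without errors before rebooting.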

Compiling Apache under the newly created /appsdata mount.

To compile from source, I took the source archive from the Apache Software Foundation website and followed the steps below.

1. Go to the link: https://archive.apache.org/dist/httpd/

2. Copy the link for the version you would like to download.

3. wget https://archive.apache.org/dist/httpd/httpd-2.2.34.tar.gz

4. tar -xvzf httpd-2.2.34.tar.gz

5. cd httpd-2.2.34

6. Install the libraries required to build from source (gcc, apr).

7. ./configure --prefix=/appsdata/apache2.2.34 --enable-modules=all

8. make

9. make install

This completes the Apache compilation. Now we have to bring it up from the installed path.

10. Start the Apache service from the path /appsdata/apache2.2.34/bin/ by executing the command below.

./apachectl -k start

11. Deploy index.html on Apache as follows: go to the DocumentRoot path /appsdata/apache2.2.34/htdocs

12. Create the index.html file and add the content below, as per the assignment.

vi index.html

<html><body><h1>"Hello AWS World"</h1></body></html>

:wq!

13. Now open the URL in a browser to test the result.

This completes Stage 1.
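The assignment also asks for the page to display the screenshots hosted on S3, so step 12 can be done non-interactively with a here-document. This is only a sketch: the function name write_index and the alt texts are assumptions, and the two image URLs are the ones listed under the deliverables at the end of this post.

```shell
#!/bin/bash
# Hypothetical helper: write index.html into the given document root.
# Usage: write_index <document root directory>
write_index() {
    local docroot="$1"
    # Quoted 'EOF' keeps the HTML literal (no variable expansion).
    cat > "$docroot/index.html" <<'EOF'
<!DOCTYPE html>
<html>
  <head><title>Hello AWS World</title></head>
  <body>
    <h1>Hello AWS World</h1>
    <img src="https://my-s3-bucket1.s3.ap-south-1.amazonaws.com/screen-shot1.png" alt="Mounted EBS volume">
    <img src="https://my-s3-bucket1.s3.ap-south-1.amazonaws.com/screen-shot2.png" alt="index.html on the EBS volume">
  </body>
</html>
EOF
}
```

Pass the htdocs directory of the Apache installation as the argument; the file is overwritten on each call.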

Before going to Stage 2, I would like to create an AMI and copy it to the Singapore region.

Copy AMI:

Launch the copied AMI in the Singapore region by selecting the Singapore VPC and its availability zones.

Just launch and follow the next steps to create the EC2 instance in the Singapore region.

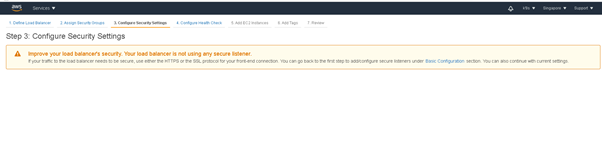

Stage 2: Building and configuring the Elastic Load Balancer:

The ELB is created in the Singapore region.

Go to the EC2 dashboard, click on Load Balancers, then create the new ELB.

In my case I have chosen a Classic Load Balancer (CLB).

Define the load balancer and its security options.

Choose the security group (SG).

As we do not have any SSL settings, we can skip this step for now.

As per the assignment, set the healthy threshold to 2.

Add the instance that was already launched from the Mumbai region AMI.

Then click on Create.

Then try to access the URL below:

my-elb-1190623872.ap-southeast-1.elb.amazonaws.com:80

Now we have successfully completed Stage 2.

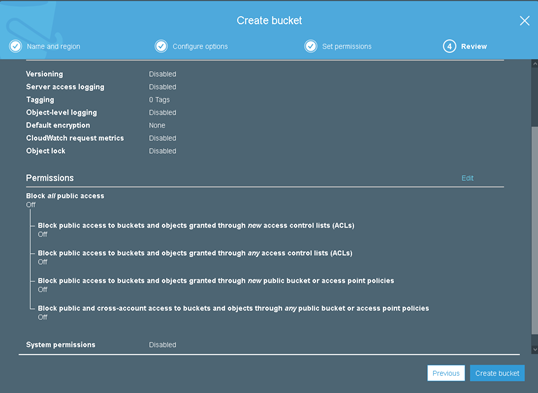

Stage 3: Configuring S3

Created a Simple Storage Service (S3) bucket with the following specification from the S3 dashboard:

- The bucket has been created in the ap-south-1 (Mumbai) region.

- The bucket has been provisioned to be publicly readable.

Leave as is.

The bucket should be public, so uncheck "Block all public access".

Just review and click on Create bucket.

DELIVERABLES

Please provide the following information to the communicated email id:

- the public DNS entry/Public IP for the EC2 instance (ec2-15-206-80-10.ap-south-1.compute.amazonaws.com)

- the public URL to the web page via the ELB (http://my-elb-1190623872.ap-southeast-1.elb.amazonaws.com/)

https://my-s3-bucket1.s3.ap-south-1.amazonaws.com/screen-shot1.png

https://my-s3-bucket1.s3.ap-south-1.amazonaws.com/screen-shot2.png